Artificial Neural Network. More...

Namespaces | |

| augmented | |

Classes | |

| class | AdaptiveMaxPooling |

| Implementation of the AdaptiveMaxPooling layer. More... | |

| class | AdaptiveMeanPooling |

| Implementation of the AdaptiveMeanPooling layer. More... | |

| class | Add |

| Implementation of the Add module class. More... | |

| class | AddMerge |

| Implementation of the AddMerge module class. More... | |

| class | AddVisitor |

| AddVisitor exposes the Add() method of the given module. More... | |

| class | AlphaDropout |

| The alpha-dropout layer is a regularizer that randomly sets input values to alphaDash with probability 'ratio'. More... | |

| class | AtrousConvolution |

| Implementation of the Atrous Convolution class. More... | |

| class | BackwardVisitor |

| BackwardVisitor executes the Backward() function given the input, error and delta parameters. More... | |

| class | BaseLayer |

| Implementation of the base layer. More... | |

| class | BatchNorm |

| Declaration of the Batch Normalization layer class. More... | |

| class | BCELoss |

| The binary-cross-entropy performance function measures the Binary Cross Entropy between the target and the output. More... | |
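As a rough illustration of what BCELoss computes, here is a standalone sketch of mean binary cross-entropy over plain `std::vector`s rather than Armadillo matrices. The function name `BinaryCrossEntropy`, the mean reduction, and the `eps` clamp are assumptions of this sketch, not mlpack's API.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper: mean binary cross-entropy between predicted
// probabilities `p` and 0/1 targets `y`. `eps` clamps p away from 0 and 1
// so that log() stays finite (an assumption of this sketch).
double BinaryCrossEntropy(const std::vector<double>& p,
                          const std::vector<double>& y,
                          double eps = 1e-10)
{
  assert(p.size() == y.size() && !p.empty());
  double sum = 0.0;
  for (std::size_t i = 0; i < p.size(); ++i)
  {
    const double q = std::clamp(p[i], eps, 1.0 - eps);
    sum += y[i] * std::log(q) + (1.0 - y[i]) * std::log(1.0 - q);
  }
  return -sum / static_cast<double>(p.size());
}
```

A prediction of 0.5 for a positive target costs log 2, and a confident correct prediction costs close to zero.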

| class | BernoulliDistribution |

| Multiple independent Bernoulli distributions. More... | |

| class | BiasSetVisitor |

| BiasSetVisitor updates the module bias parameters given the parameters set. More... | |

| class | BicubicInterpolation |

| Definition and Implementation of the Bicubic Interpolation Layer. More... | |

| class | BilinearInterpolation |

| Definition and Implementation of the Bilinear Interpolation Layer. More... | |

| class | BinaryRBM |

| For more information, see the following paper: More... | |

| class | BRNN |

| Implementation of a standard bidirectional recurrent neural network container. More... | |

| class | CELU |

| The CELU activation function, defined by. More... | |
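The brief above omits the formula. A scalar sketch of the standard CELU definition, CELU(x) = max(0, x) + min(0, alpha * (exp(x / alpha) - 1)), is shown below; the names `Celu` and `CeluDeriv` and the default `alpha = 1.0` are illustrative assumptions, not mlpack's interface.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical scalar CELU:
//   CELU(x) = max(0, x) + min(0, alpha * (exp(x / alpha) - 1)), alpha > 0.
double Celu(double x, double alpha = 1.0)
{
  assert(alpha > 0.0);  // The formula divides by alpha.
  return std::max(0.0, x) +
         std::min(0.0, alpha * (std::exp(x / alpha) - 1.0));
}

// Derivative with respect to x: 1 for x > 0, exp(x / alpha) otherwise.
double CeluDeriv(double x, double alpha = 1.0)
{
  assert(alpha > 0.0);
  return x > 0.0 ? 1.0 : std::exp(x / alpha);
}
```

For large negative inputs the output saturates at -alpha, which is what makes alpha a scale parameter.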

| class | ChannelShuffle |

| Definition and implementation of the Channel Shuffle Layer. More... | |

| class | Concat |

| Implementation of the Concat class. More... | |

| class | Concatenate |

| Implementation of the Concatenate module class. More... | |

| class | ConcatPerformance |

| Implementation of the concat performance class. More... | |

| class | Constant |

| Implementation of the constant layer. More... | |

| class | ConstInitialization |

| This class is used to initialize the weight matrix with constant values. More... | |

| class | Convolution |

| Implementation of the Convolution class. More... | |

| class | CopyVisitor |

| This visitor supports the copy constructor for neural network modules. More... | |

| class | CosineEmbeddingLoss |

| Cosine Embedding Loss function is used for measuring whether two inputs are similar or dissimilar, using the cosine distance, and is typically used for learning nonlinear embeddings or semi-supervised learning. More... | |

| class | CReLU |

| A concatenated ReLU has two outputs, one ReLU and one negative ReLU, concatenated together. More... | |

| class | DCGAN |

| For more information, see the following paper: More... | |

| class | DeleteVisitor |

| DeleteVisitor executes the destructor of the instantiated object. More... | |

| class | DeltaVisitor |

| DeltaVisitor exposes the delta parameter of the given module. More... | |

| class | DeterministicSetVisitor |

| DeterministicSetVisitor sets the deterministic parameter given the deterministic value. More... | |

| class | DiceLoss |

| The dice loss performance function measures the network's performance according to the dice coefficient between the input and target distributions. More... | |

| class | DropConnect |

| The DropConnect layer is a regularizer that randomly sets connection values to zero with probability 'ratio' and scales the remaining elements by a factor of 1 / (1 - ratio). More... | |

| class | Dropout |

| The dropout layer is a regularizer that randomly sets input values to zero with probability 'ratio' and scales the remaining elements by a factor of 1 / (1 - ratio) during training rather than at test time, so that the expected sum stays the same. More... | |
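The scaling rule in the Dropout description ("inverted" dropout) can be sketched on a plain `std::vector`. The function `DropoutForward` and its `deterministic` flag are hypothetical names chosen to echo the DeterministicSetVisitor entry; this is not mlpack's Dropout class.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical inverted dropout. In training mode each element is zeroed
// with probability `ratio`, and survivors are multiplied by 1 / (1 - ratio)
// so the expected value of every element is unchanged. In deterministic
// (test) mode the input passes through untouched.
std::vector<double> DropoutForward(const std::vector<double>& input,
                                   double ratio,
                                   bool deterministic,
                                   std::mt19937& rng)
{
  assert(ratio >= 0.0 && ratio < 1.0);
  if (deterministic)
    return input;

  std::bernoulli_distribution keep(1.0 - ratio);
  const double scale = 1.0 / (1.0 - ratio);
  std::vector<double> output(input.size());
  for (std::size_t i = 0; i < input.size(); ++i)
    output[i] = keep(rng) ? input[i] * scale : 0.0;
  return output;
}
```

Because the rescaling happens during training, no correction is needed at test time.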

| class | EarthMoverDistance |

| The earth mover distance function measures the network's performance according to the Kantorovich-Rubinstein duality approximation. More... | |

| class | ElishFunction |

| The ELiSH function, defined by. More... | |

| class | ElliotFunction |

| The Elliot function, defined by. More... | |

| class | ELU |

| The ELU activation function, defined by. More... | |

| class | EmptyLoss |

| The empty loss does nothing, letting the user calculate the loss outside the model. More... | |

| class | FastLSTM |

| An implementation of a faster version of the LSTM network layer. More... | |

| class | FFN |

| Implementation of a standard feed forward network. More... | |

| class | FFTConvolution |

| Computes the two-dimensional convolution through the FFT. More... | |

| class | FlattenTSwish |

| The Flatten T Swish activation function, defined by. More... | |

| class | FlexibleReLU |

| The FlexibleReLU activation function, defined by. More... | |

| class | ForwardVisitor |

| ForwardVisitor executes the Forward() function given the input and output parameters. More... | |

| class | FullConvolution |

| class | GAN |

| The implementation of the standard GAN module. More... | |

| class | GaussianFunction |

| The Gaussian function, defined by. More... | |

| class | GaussianInitialization |

| This class is used to initialize the weight matrix with a Gaussian distribution. More... | |

| class | GELUFunction |

| The GELU function, defined by. More... | |

| class | Glimpse |

| The glimpse layer returns a retina-like representation (down-scaled cropped images) of increasing scale around a given location in a given image. More... | |

| class | GlorotInitializationType |

| This class is used to initialize the weight matrix with the Glorot Initialization method. More... | |

| class | GradientSetVisitor |

| GradientSetVisitor updates the gradient parameter given the gradient set. More... | |

| class | GradientUpdateVisitor |

| GradientUpdateVisitor updates the gradient parameter given the gradient set. More... | |

| class | GradientVisitor |

| GradientVisitor executes the Gradient() method of the given module using the input and delta parameters. More... | |

| class | GradientZeroVisitor |

| class | GroupNorm |

| Declaration of the Group Normalization class. More... | |

| class | GRU |

| An implementation of a GRU network layer. More... | |

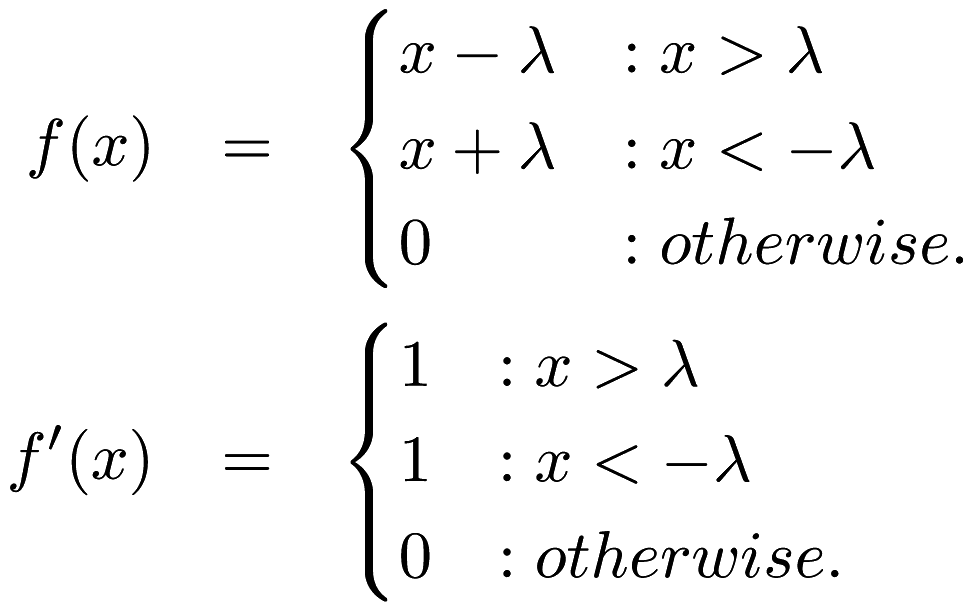

| class | HardShrink |

| The Hard Shrink operator, defined by. More... | |
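The brief omits the formula. The usual hard-shrink definition keeps x when |x| > lambda and outputs 0 otherwise; the scalar sketch below uses hypothetical names (`HardShrinkFn`, `HardShrinkDeriv`) and an explicit `lambda` argument, and does not reproduce mlpack's class interface.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical hard shrink: f(x) = x if |x| > lambda, else 0.
double HardShrinkFn(double x, double lambda)
{
  assert(lambda >= 0.0);
  return std::abs(x) > lambda ? x : 0.0;
}

// Derivative: 1 outside [-lambda, lambda], 0 inside.
double HardShrinkDeriv(double x, double lambda)
{
  assert(lambda >= 0.0);
  return std::abs(x) > lambda ? 1.0 : 0.0;
}
```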

| class | HardSigmoidFunction |

| The hard sigmoid function, defined by. More... | |

| class | HardSwishFunction |

| The Hard Swish function, defined by. More... | |

| class | HardTanH |

| The Hard Tanh activation function, defined by. More... | |

| class | HeInitialization |

| This class is used to initialize the weight matrix with the He initialization rule given by He et al. More... | |
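A minimal sketch of He (Kaiming) normal initialization is shown below, drawing each weight from N(0, 2 / fanIn). The function `HeNormal`, the flat `std::vector` storage, and the explicit `fanIn` argument are assumptions of this sketch; how mlpack's HeInitialization derives the fan-in from the matrix shape is not stated here.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical He normal initializer: draws rows * cols weights from a
// normal distribution with mean 0 and standard deviation sqrt(2 / fanIn),
// where `fanIn` is the number of inputs feeding each unit.
std::vector<double> HeNormal(std::size_t rows, std::size_t cols,
                             std::size_t fanIn, std::mt19937& rng)
{
  assert(fanIn > 0);
  std::normal_distribution<double> dist(0.0, std::sqrt(2.0 / fanIn));
  std::vector<double> w(rows * cols);
  for (double& v : w)
    v = dist(rng);
  return w;
}
```

The factor 2 compensates for ReLU zeroing roughly half of its inputs, which keeps activation variance stable across layers.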

| class | Highway |

| Implementation of the Highway layer. More... | |

| class | HingeEmbeddingLoss |

| The Hinge Embedding loss function is often used to compute the loss between y_true and y_pred. More... | |

| class | HingeLoss |

| Computes the hinge loss between the predicted output and the target. More... | |

| class | HuberLoss |

| The Huber loss is a loss function used in robust regression, that is less sensitive to outliers in data than the squared error loss. More... | |
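The reduced sensitivity to outliers comes from switching from a quadratic to a linear penalty beyond a threshold delta. A standalone sketch of the mean Huber loss follows; `HuberLossMean` and its `delta = 1.0` default are illustrative assumptions, not mlpack's HuberLoss signature.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical mean Huber loss with threshold `delta`:
//   0.5 * d^2                 if |d| <= delta
//   delta * (|d| - delta / 2) otherwise
// The two branches meet with equal value and slope at |d| == delta.
double HuberLossMean(const std::vector<double>& prediction,
                     const std::vector<double>& target,
                     double delta = 1.0)
{
  assert(prediction.size() == target.size() && !prediction.empty());
  double sum = 0.0;
  for (std::size_t i = 0; i < prediction.size(); ++i)
  {
    const double d = std::abs(prediction[i] - target[i]);
    sum += d <= delta ? 0.5 * d * d : delta * (d - 0.5 * delta);
  }
  return sum / prediction.size();
}
```

With delta = 1, a residual of 3 costs 2.5 instead of the 4.5 a halved squared error would assign.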

| class | IdentityFunction |

| The identity function, defined by. More... | |

| class | InitTraits |

| This is a template class that can provide information about various initialization methods. More... | |

| class | InitTraits< KathirvalavakumarSubavathiInitialization > |

| Initialization traits of the Kathirvalavakumar-Subavathi initialization rule. More... | |

| class | InitTraits< NguyenWidrowInitialization > |

| Initialization traits of the Nguyen-Widrow initialization rule. More... | |

| class | InShapeVisitor |

| InShapeVisitor returns the input shape a Layer expects. More... | |

| class | InvQuadFunction |

| The Inverse Quadratic function, defined by. More... | |

| class | ISRLU |

| The ISRLU activation function, defined by. More... | |

| class | Join |

| Implementation of the Join module class. More... | |

| class | KathirvalavakumarSubavathiInitialization |

| This class is used to initialize the weight matrix with the method proposed by Kathirvalavakumar and Subavathi. More... | |

| class | KLDivergence |

| The Kullback–Leibler divergence is often used for continuous distributions (direct regression). More... | |

| class | L1Loss |

| The L1 loss is a loss function that measures the mean absolute error (MAE) between each element in the input x and target y. More... | |

| class | LayerNorm |

| Declaration of the Layer Normalization class. More... | |

| class | LayerTraits |

| This is a template class that can provide information about various layers. More... | |

| class | LeakyReLU |

| The LeakyReLU activation function, defined by. More... | |

| class | LecunNormalInitialization |

| This class is used to initialize the weight matrix with the LeCun normal initialization rule. More... | |

| class | Linear |

| Implementation of the Linear layer class. More... | |

| class | Linear3D |

| Implementation of the Linear3D layer class. More... | |

| class | LinearNoBias |

| Implementation of the LinearNoBias class. More... | |

| class | LiSHTFunction |

| The LiSHT function, defined by. More... | |

| class | LoadOutputParameterVisitor |

| LoadOutputParameterVisitor restores the output parameter using the given parameter set. More... | |

| class | LogCoshLoss |

| The Log-Hyperbolic-Cosine loss function is often used to improve variational autoencoders. More... | |

| class | LogisticFunction |

| The logistic function, defined by. More... | |

| class | LogSoftMax |

| Implementation of the log softmax layer. More... | |

| class | Lookup |

| The Lookup class stores word embeddings and retrieves them using tokens. More... | |

| class | LossVisitor |

| LossVisitor exposes the Loss() method of the given module. More... | |

| class | LpPooling |

| Implementation of the LpPooling layer. More... | |

| class | LRegularizer |

| The L_p regularizer for arbitrary integer p. More... | |
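As a sketch of an L_p penalty for integer p, templated on `P` in the same way `LRegularizer<1>` and `LRegularizer<2>` are parameterized, the code below computes factor * sum |w_i|^P and its gradient. The names `LpPenalty` and `LpPenaltyGradient` and the exact scaling convention are assumptions; mlpack's own scaling may differ.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical L_p penalty: factor * sum_i |w_i|^P for integer P >= 1.
template<int P>
double LpPenalty(const std::vector<double>& w, double factor)
{
  static_assert(P >= 1, "P must be a positive integer");
  double sum = 0.0;
  for (double v : w)
    sum += std::pow(std::abs(v), P);
  return factor * sum;
}

// Gradient of the penalty: factor * P * |w_i|^(P - 1) * sign(w_i).
// For P == 1 the subgradient at w_i == 0 is taken to be 0.
template<int P>
std::vector<double> LpPenaltyGradient(const std::vector<double>& w,
                                      double factor)
{
  static_assert(P >= 1, "P must be a positive integer");
  std::vector<double> g(w.size());
  for (std::size_t i = 0; i < w.size(); ++i)
  {
    const double sign = (w[i] > 0.0) - (w[i] < 0.0);
    g[i] = factor * P * std::pow(std::abs(w[i]), P - 1) * sign;
  }
  return g;
}
```

P = 1 pushes small weights exactly to zero (sparsity), while P = 2 shrinks all weights proportionally.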

| class | LSTM |

| Implementation of the LSTM module class. More... | |

| class | MarginRankingLoss |

| Margin ranking loss measures the loss given inputs and a label vector with values of 1 or -1. More... | |
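In the usual formulation, each pair (x1, x2) with label y in {1, -1} costs max(0, -y * (x1 - x2) + margin). The sketch below averages over pairs held in separate vectors. The function name `MarginRanking` and that input layout are assumptions; how mlpack packs the two inputs is not described here.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical mean margin ranking loss. y == 1 asks x1 to rank above x2
// by at least `margin`; y == -1 asks for the reverse.
double MarginRanking(const std::vector<double>& x1,
                     const std::vector<double>& x2,
                     const std::vector<double>& y,
                     double margin = 0.0)
{
  assert(x1.size() == x2.size() && x1.size() == y.size() && !x1.empty());
  double sum = 0.0;
  for (std::size_t i = 0; i < x1.size(); ++i)
    sum += std::max(0.0, -y[i] * (x1[i] - x2[i]) + margin);
  return sum / x1.size();
}
```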

| class | MaxPooling |

| Implementation of the MaxPooling layer. More... | |

| class | MaxPoolingRule |

| class | MeanAbsolutePercentageError |

| The mean absolute percentage error performance function measures the network's performance according to the mean of the absolute difference between input and target divided by target. More... | |

| class | MeanBiasError |

| The mean bias error performance function measures the network's performance according to the mean of errors. More... | |

| class | MeanPooling |

| Implementation of the MeanPooling layer. More... | |

| class | MeanPoolingRule |

| class | MeanSquaredError |

| The mean squared error performance function measures the network's performance according to the mean of squared errors. More... | |

| class | MeanSquaredLogarithmicError |

| The mean squared logarithmic error performance function measures the network's performance according to the mean of squared logarithmic errors. More... | |

| class | MiniBatchDiscrimination |

| Implementation of the MiniBatchDiscrimination layer. More... | |

| class | MishFunction |

| The Mish function, defined by. More... | |

| class | MultiheadAttention |

| Multihead Attention allows the model to jointly attend to information from different representation subspaces at different positions. More... | |

| class | MultiLabelSoftMarginLoss |

| class | MultiplyConstant |

| Implementation of the multiply constant layer. More... | |

| class | MultiplyMerge |

| Implementation of the MultiplyMerge module class. More... | |

| class | MultiQuadFunction |

| The Multi Quadratic function, defined by. More... | |

| class | NaiveConvolution |

| Computes the two-dimensional convolution. More... | |
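A direct-summation ("naive") two-dimensional convolution in valid mode is sketched below; the output is (H - kH + 1) x (W - kW + 1). The kernel is flipped, as in the mathematical definition of convolution. Whether mlpack's NaiveConvolution flips the kernel is not stated here, and `ConvolveValid` and `Matrix` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;

// Hypothetical valid-mode 2-D convolution by direct summation. Only
// positions where the (flipped) kernel fits entirely inside the input are
// computed, so no padding is involved.
Matrix ConvolveValid(const Matrix& input, const Matrix& kernel)
{
  assert(!input.empty() && !kernel.empty());
  const std::size_t h = input.size(), w = input[0].size();
  const std::size_t kh = kernel.size(), kw = kernel[0].size();
  assert(kh <= h && kw <= w);

  Matrix out(h - kh + 1, std::vector<double>(w - kw + 1, 0.0));
  for (std::size_t i = 0; i < out.size(); ++i)
    for (std::size_t j = 0; j < out[0].size(); ++j)
      for (std::size_t a = 0; a < kh; ++a)
        for (std::size_t b = 0; b < kw; ++b)
          out[i][j] += input[i + a][j + b] * kernel[kh - 1 - a][kw - 1 - b];
  return out;
}
```

The cost is O(H * W * kH * kW), which is why FFT- and SVD-based variants exist for larger kernels.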

| class | NearestInterpolation |

| Definition and Implementation of the Nearest Interpolation Layer. More... | |

| class | NegativeLogLikelihood |

| Implementation of the negative log likelihood layer. More... | |

| class | NetworkInitialization |

| This class is used to initialize the network with the given initialization rule. More... | |

| class | NguyenWidrowInitialization |

| This class is used to initialize the weight matrix with the Nguyen-Widrow method. More... | |

| class | NoisyLinear |

| Implementation of the NoisyLinear layer class. More... | |

| class | NoRegularizer |

| Implementation of the NoRegularizer. More... | |

| class | NormalDistribution |

| Implementation of the Normal Distribution function. More... | |

| class | OivsInitialization |

| This class is used to initialize the weight matrix with the oivs method. More... | |

| class | OrthogonalInitialization |

| This class is used to initialize the weight matrix with the orthogonal matrix initialization. More... | |

| class | OrthogonalRegularizer |

| Implementation of the OrthogonalRegularizer. More... | |

| class | OutputHeightVisitor |

| OutputHeightVisitor exposes the OutputHeight() method of the given module. More... | |

| class | OutputParameterVisitor |

| OutputParameterVisitor exposes the output parameter of the given module. More... | |

| class | OutputWidthVisitor |

| OutputWidthVisitor exposes the OutputWidth() method of the given module. More... | |

| class | Padding |

| Implementation of the Padding module class. More... | |

| class | ParametersSetVisitor |

| ParametersSetVisitor updates the parameter set using the given matrix. More... | |

| class | ParametersVisitor |

| ParametersVisitor exposes the parameter set of the given module and stores it in the given matrix. More... | |

| class | PixelShuffle |

| Implementation of the PixelShuffle layer. More... | |

| class | Poisson1Function |

| The Poisson one function, defined by. More... | |

| class | PoissonNLLLoss |

| Implementation of the Poisson negative log likelihood loss. More... | |

| class | PositionalEncoding |

| Positional Encoding injects some information about the relative or absolute position of the tokens in the sequence. More... | |
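One common way to inject position information is the sinusoidal scheme from the original Transformer. The sketch below assumes that scheme and builds a maxLen x dim table. The function `SinusoidalEncoding` and the row-per-position layout are assumptions of this sketch; mlpack's PositionalEncoding layout is not described here.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical sinusoidal positional encoding table (maxLen x dim).
// Column 2i holds sin(pos / 10000^(2i / dim)); column 2i + 1 holds the
// matching cosine.
std::vector<std::vector<double>> SinusoidalEncoding(std::size_t maxLen,
                                                    std::size_t dim)
{
  std::vector<std::vector<double>> pe(maxLen, std::vector<double>(dim, 0.0));
  for (std::size_t pos = 0; pos < maxLen; ++pos)
  {
    for (std::size_t k = 0; k < dim; k += 2)
    {
      // k plays the role of 2i, so the exponent 2i / dim is k / dim.
      const double angle =
          pos / std::pow(10000.0, static_cast<double>(k) / dim);
      pe[pos][k] = std::sin(angle);
      if (k + 1 < dim)
        pe[pos][k + 1] = std::cos(angle);
    }
  }
  return pe;
}
```

Each row would be added to the embedding of the token at that position.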

| class | PReLU |

| The PReLU activation function, defined by (where alpha is trainable). More... | |

| class | QuadraticFunction |

| The Quadratic function, defined by. More... | |

| class | RandomInitialization |

| This class is used to randomly initialize the weight matrix. More... | |

| class | RBF |

| Implementation of the Radial Basis Function layer. More... | |

| class | RBM |

| The implementation of the RBM module. More... | |

| class | ReconstructionLoss |

| The reconstruction loss performance function measures the network's performance as the negative log probability of the target under the input distribution. More... | |

| class | RectifierFunction |

| The rectifier function, defined by. More... | |

| class | Recurrent |

| Implementation of the RecurrentLayer class. More... | |

| class | RecurrentAttention |

| This class implements the Recurrent Model for Visual Attention, using a variety of possible layer implementations. More... | |

| class | ReinforceNormal |

| Implementation of the reinforce normal layer. More... | |

| class | ReLU6 |

| class | Reparametrization |

| Implementation of the Reparametrization layer class. More... | |

| class | ResetCellVisitor |

| ResetCellVisitor executes the ResetCell() function. More... | |

| class | ResetVisitor |

| ResetVisitor executes the Reset() function. More... | |

| class | RewardSetVisitor |

| RewardSetVisitor sets the reward parameter given the reward value. More... | |

| class | RNN |

| Implementation of a standard recurrent neural network container. More... | |

| class | RunSetVisitor |

| RunSetVisitor sets the run parameter given the run value. More... | |

| class | SaveOutputParameterVisitor |

| SaveOutputParameterVisitor saves the output parameter into the given parameter set. More... | |

| class | Select |

| The select module selects the specified column from a given input matrix. More... | |

| class | Sequential |

| Implementation of the Sequential class. More... | |

| class | SetInputHeightVisitor |

| SetInputHeightVisitor updates the input height parameter with the given input height. More... | |

| class | SetInputWidthVisitor |

| SetInputWidthVisitor updates the input width parameter with the given input width. More... | |

| class | SigmoidCrossEntropyError |

| The SigmoidCrossEntropyError performance function measures the network's performance according to the cross-entropy function between the input and target distributions. More... | |

| class | SILUFunction |

| The SILU function, defined by. More... | |

| class | SoftMarginLoss |

| class | Softmax |

| Implementation of the Softmax layer. More... | |
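Softmax maps a vector to a probability distribution, exp(x_i) / sum_j exp(x_j). The sketch below uses the standard max-subtraction trick: the common factor exp(-max) cancels, but overflow is avoided for large inputs. `Softmax` here is a hypothetical free function, and the sketch does not claim that mlpack's layer uses the same trick.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical numerically stable softmax over a single vector.
std::vector<double> Softmax(const std::vector<double>& x)
{
  assert(!x.empty());
  const double m = *std::max_element(x.begin(), x.end());
  std::vector<double> y(x.size());
  double sum = 0.0;
  for (std::size_t i = 0; i < x.size(); ++i)
  {
    y[i] = std::exp(x[i] - m);  // <= 1, so this never overflows.
    sum += y[i];
  }
  for (double& v : y)
    v /= sum;
  return y;
}
```

Without the shift, `std::exp(1000.0)` overflows to infinity and the result becomes NaN.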

| class | Softmin |

| Implementation of the Softmin layer. More... | |

| class | SoftplusFunction |

| The softplus function, defined by. More... | |

| class | SoftShrink |

| The Soft Shrink operator, defined by. More... | |
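The usual soft-shrink definition moves x toward zero by lambda and maps the interval [-lambda, lambda] to 0. A scalar sketch is shown below; `SoftShrinkFn` and its explicit `lambda` argument are hypothetical and do not reproduce mlpack's class interface.

```cpp
#include <cassert>

// Hypothetical soft shrink:
//   x - lambda  if x > lambda
//   x + lambda  if x < -lambda
//   0           otherwise
double SoftShrinkFn(double x, double lambda)
{
  assert(lambda >= 0.0);
  if (x > lambda)
    return x - lambda;
  if (x < -lambda)
    return x + lambda;
  return 0.0;
}
```

Unlike hard shrink, the output is continuous at |x| = lambda.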

| class | SoftsignFunction |

| The softsign function, defined by. More... | |

| class | SpatialDropout |

| Implementation of the SpatialDropout layer. More... | |

| class | SpikeSlabRBM |

| For more information, see the following paper: More... | |

| class | SplineFunction |

| The Spline function, defined by. More... | |

| class | StandardGAN |

| For more information, see the following paper: More... | |

| class | Subview |

| Implementation of the subview layer. More... | |

| class | SVDConvolution |

| Computes the two-dimensional convolution using singular value decomposition. More... | |

| class | SwishFunction |

| The swish function, defined by. More... | |

| class | TanhExpFunction |

| The TanhExp function, defined by. More... | |

| class | TanhFunction |

| The tanh function, defined by. More... | |

| class | TransposedConvolution |

| Implementation of the Transposed Convolution class. More... | |

| class | TripletMarginLoss |

| The Triplet Margin Loss performance function measures the network's performance according to the relative distance from the anchor input of the positive (truthy) and negative (falsy) inputs. More... | |

| class | ValidConvolution |

| class | VirtualBatchNorm |

| Declaration of the VirtualBatchNorm layer class. More... | |

| class | VRClassReward |

| Implementation of the variance reduced classification reinforcement layer. More... | |

| class | WeightNorm |

| Declaration of the WeightNorm layer class. More... | |

| class | WeightSetVisitor |

| WeightSetVisitor updates the module parameters given the parameters set. More... | |

| class | WeightSizeVisitor |

| WeightSizeVisitor returns the number of weights of the given module. More... | |

| class | WGAN |

| For more information, see the following paper: More... | |

| class | WGANGP |

| For more information, see the following paper: More... | |

Typedefs | |

template < typename InputDataType = arma::mat , typename OutputDataType = arma::mat > | |

| using | CrossEntropyError = BCELoss< InputDataType, OutputDataType > |

| Adding alias of BCELoss. More... | |

template < class ActivationFunction = LogisticFunction , typename InputDataType = arma::mat , typename OutputDataType = arma::mat > | |

| using | CustomLayer = BaseLayer< ActivationFunction, InputDataType, OutputDataType > |

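| Custom layer that wraps a user-supplied activation function in a BaseLayer; with the default LogisticFunction it is a standard sigmoid layer. More... | |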

template < class ActivationFunction = ElishFunction , typename InputDataType = arma::mat , typename OutputDataType = arma::mat > | |

| using | ElishFunctionLayer = BaseLayer< ActivationFunction, InputDataType, OutputDataType > |

| Standard ELiSH-Layer using the ELiSH activation function. More... | |

template < class ActivationFunction = ElliotFunction , typename InputDataType = arma::mat , typename OutputDataType = arma::mat > | |

| using | ElliotFunctionLayer = BaseLayer< ActivationFunction, InputDataType, OutputDataType > |

| Standard Elliot-Layer using the Elliot activation function. More... | |

template < typename MatType = arma::mat > | |

| using | Embedding = Lookup< MatType, MatType > |

template < class ActivationFunction = GaussianFunction , typename InputDataType = arma::mat , typename OutputDataType = arma::mat > | |

| using | GaussianFunctionLayer = BaseLayer< ActivationFunction, InputDataType, OutputDataType > |

| Standard Gaussian-Layer using the Gaussian activation function. More... | |

template < class ActivationFunction = GELUFunction , typename InputDataType = arma::mat , typename OutputDataType = arma::mat > | |

| using | GELUFunctionLayer = BaseLayer< ActivationFunction, InputDataType, OutputDataType > |

| Standard GELU-Layer using the GELU activation function. More... | |

| using | GlorotInitialization = GlorotInitializationType< false > |

| GlorotInitialization uses a normal (Gaussian) distribution. More... | |

template < class ActivationFunction = HardSigmoidFunction , typename InputDataType = arma::mat , typename OutputDataType = arma::mat > | |

| using | HardSigmoidLayer = BaseLayer< ActivationFunction, InputDataType, OutputDataType > |

| Standard HardSigmoid-Layer using the HardSigmoid activation function. More... | |

template < class ActivationFunction = HardSwishFunction , typename InputDataType = arma::mat , typename OutputDataType = arma::mat > | |

| using | HardSwishFunctionLayer = BaseLayer< ActivationFunction, InputDataType, OutputDataType > |

| Standard HardSwish-Layer using the HardSwish activation function. More... | |

template < class ActivationFunction = IdentityFunction , typename InputDataType = arma::mat , typename OutputDataType = arma::mat > | |

| using | IdentityLayer = BaseLayer< ActivationFunction, InputDataType, OutputDataType > |

| Standard Identity-Layer using the identity activation function. More... | |

| typedef LRegularizer< 1 > | L1Regularizer |

| The L1 Regularizer. More... | |

| typedef LRegularizer< 2 > | L2Regularizer |

| The L2 Regularizer. More... | |

| template<typename... CustomLayers> | |

| using | LayerTypes = boost::variant< AdaptiveMaxPooling< arma::mat, arma::mat > *, AdaptiveMeanPooling< arma::mat, arma::mat > *, Add< arma::mat, arma::mat > *, AddMerge< arma::mat, arma::mat > *, AlphaDropout< arma::mat, arma::mat > *, AtrousConvolution< NaiveConvolution< ValidConvolution >, NaiveConvolution< FullConvolution >, NaiveConvolution< ValidConvolution >, arma::mat, arma::mat > *, BaseLayer< LogisticFunction, arma::mat, arma::mat > *, BaseLayer< IdentityFunction, arma::mat, arma::mat > *, BaseLayer< TanhFunction, arma::mat, arma::mat > *, BaseLayer< SoftplusFunction, arma::mat, arma::mat > *, BaseLayer< RectifierFunction, arma::mat, arma::mat > *, BatchNorm< arma::mat, arma::mat > *, BilinearInterpolation< arma::mat, arma::mat > *, CELU< arma::mat, arma::mat > *, Concat< arma::mat, arma::mat > *, Concatenate< arma::mat, arma::mat > *, ConcatPerformance< NegativeLogLikelihood< arma::mat, arma::mat >, arma::mat, arma::mat > *, Constant< arma::mat, arma::mat > *, Convolution< NaiveConvolution< ValidConvolution >, NaiveConvolution< FullConvolution >, NaiveConvolution< ValidConvolution >, arma::mat, arma::mat > *, CReLU< arma::mat, arma::mat > *, DropConnect< arma::mat, arma::mat > *, Dropout< arma::mat, arma::mat > *, ELU< arma::mat, arma::mat > *, FastLSTM< arma::mat, arma::mat > *, GRU< arma::mat, arma::mat > *, HardTanH< arma::mat, arma::mat > *, Join< arma::mat, arma::mat > *, LayerNorm< arma::mat, arma::mat > *, LeakyReLU< arma::mat, arma::mat > *, Linear< arma::mat, arma::mat, NoRegularizer > *, LinearNoBias< arma::mat, arma::mat, NoRegularizer > *, LogSoftMax< arma::mat, arma::mat > *, Lookup< arma::mat, arma::mat > *, LSTM< arma::mat, arma::mat > *, MaxPooling< arma::mat, arma::mat > *, MeanPooling< arma::mat, arma::mat > *, MiniBatchDiscrimination< arma::mat, arma::mat > *, MultiplyConstant< arma::mat, arma::mat > *, MultiplyMerge< arma::mat, arma::mat > *, NegativeLogLikelihood< arma::mat, arma::mat > *, NoisyLinear< arma::mat, arma::mat > *, 
Padding< arma::mat, arma::mat > *, PReLU< arma::mat, arma::mat > *, Sequential< arma::mat, arma::mat, false > *, Sequential< arma::mat, arma::mat, true > *, Softmax< arma::mat, arma::mat > *, TransposedConvolution< NaiveConvolution< ValidConvolution >, NaiveConvolution< ValidConvolution >, NaiveConvolution< ValidConvolution >, arma::mat, arma::mat > *, WeightNorm< arma::mat, arma::mat > *, MoreTypes, CustomLayers *... > |
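LayerTypes is a closed variant of layer pointers, and the visitor classes listed above (ForwardVisitor and others) dispatch a call to whichever layer a variant holds. The sketch below illustrates that pattern with C++17 `std::variant` and `std::visit` instead of `boost::variant`. The toy layers `ToyScale` and `ToyReLU`, `ToyForwardVisitor`, and `RunNetwork` are hypothetical stand-ins, not mlpack types.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <variant>
#include <vector>

// Two hypothetical toy layers, each exposing a Forward() member.
struct ToyScale
{
  double factor;
  void Forward(const std::vector<double>& in, std::vector<double>& out) const
  {
    out.resize(in.size());
    for (std::size_t i = 0; i < in.size(); ++i)
      out[i] = factor * in[i];
  }
};

struct ToyReLU
{
  void Forward(const std::vector<double>& in, std::vector<double>& out) const
  {
    out.resize(in.size());
    for (std::size_t i = 0; i < in.size(); ++i)
      out[i] = std::max(0.0, in[i]);
  }
};

// Closed set of layer pointer types, analogous in spirit to LayerTypes.
using ToyLayerTypes = std::variant<ToyScale*, ToyReLU*>;

// Calls Forward() on whichever layer the variant holds.
struct ToyForwardVisitor
{
  const std::vector<double>& input;
  std::vector<double>& output;

  template<typename LayerPtr>
  void operator()(LayerPtr layer) const { layer->Forward(input, output); }
};

// Runs the layers in order, feeding each output into the next layer.
std::vector<double> RunNetwork(const std::vector<ToyLayerTypes>& network,
                               std::vector<double> x)
{
  std::vector<double> y;
  for (const ToyLayerTypes& layer : network)
  {
    std::visit(ToyForwardVisitor{x, y}, layer);
    x = y;
  }
  return x;
}
```

A closed variant avoids virtual inheritance, but every new layer type must be added to the variant, which is what the `CustomLayers...` parameter and the MoreTypes overflow variant accommodate.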

template < class ActivationFunction = LiSHTFunction , typename InputDataType = arma::mat , typename OutputDataType = arma::mat > | |

| using | LiSHTFunctionLayer = BaseLayer< ActivationFunction, InputDataType, OutputDataType > |

| Standard LiSHT-Layer using the LiSHT activation function. More... | |

template < class ActivationFunction = MishFunction , typename InputDataType = arma::mat , typename OutputDataType = arma::mat > | |

| using | MishFunctionLayer = BaseLayer< ActivationFunction, InputDataType, OutputDataType > |

| Standard Mish-Layer using the Mish activation function. More... | |

| using | MoreTypes = boost::variant< FlexibleReLU< arma::mat, arma::mat > *, Linear3D< arma::mat, arma::mat, NoRegularizer > *, LpPooling< arma::mat, arma::mat > *, PixelShuffle< arma::mat, arma::mat > *, ChannelShuffle< arma::mat, arma::mat > *, Glimpse< arma::mat, arma::mat > *, Highway< arma::mat, arma::mat > *, MultiheadAttention< arma::mat, arma::mat, NoRegularizer > *, Recurrent< arma::mat, arma::mat > *, RecurrentAttention< arma::mat, arma::mat > *, ReinforceNormal< arma::mat, arma::mat > *, ReLU6< arma::mat, arma::mat > *, Reparametrization< arma::mat, arma::mat > *, Select< arma::mat, arma::mat > *, SpatialDropout< arma::mat, arma::mat > *, Subview< arma::mat, arma::mat > *, VRClassReward< arma::mat, arma::mat > *, VirtualBatchNorm< arma::mat, arma::mat > *, RBF< arma::mat, arma::mat, GaussianFunction > *, BaseLayer< GaussianFunction, arma::mat, arma::mat > *, PositionalEncoding< arma::mat, arma::mat > *, ISRLU< arma::mat, arma::mat > *, BicubicInterpolation< arma::mat, arma::mat > *, NearestInterpolation< arma::mat, arma::mat > *, GroupNorm< arma::mat, arma::mat > *> |

template < class ActivationFunction = RectifierFunction , typename InputDataType = arma::mat , typename OutputDataType = arma::mat > | |

| using | ReLULayer = BaseLayer< ActivationFunction, InputDataType, OutputDataType > |

| Standard rectified linear unit non-linearity layer. More... | |

| template<typename InputDataType = arma::mat, typename OutputDataType = arma::mat, typename... CustomLayers> | |

| using | Residual = Sequential< InputDataType, OutputDataType, true, CustomLayers... > |

| using | SELU = ELU< arma::mat, arma::mat > |

template < class ActivationFunction = LogisticFunction , typename InputDataType = arma::mat , typename OutputDataType = arma::mat > | |

| using | SigmoidLayer = BaseLayer< ActivationFunction, InputDataType, OutputDataType > |

| Standard Sigmoid-Layer using the logistic activation function. More... | |

template < class ActivationFunction = SILUFunction , typename InputDataType = arma::mat , typename OutputDataType = arma::mat > | |

| using | SILUFunctionLayer = BaseLayer< ActivationFunction, InputDataType, OutputDataType > |

| Standard SILU-Layer using the SILU activation function. More... | |

template < class ActivationFunction = SoftplusFunction , typename InputDataType = arma::mat , typename OutputDataType = arma::mat > | |

| using | SoftPlusLayer = BaseLayer< ActivationFunction, InputDataType, OutputDataType > |

| Standard Softplus-Layer using the Softplus activation function. More... | |

template < class ActivationFunction = SwishFunction , typename InputDataType = arma::mat , typename OutputDataType = arma::mat > | |

| using | SwishFunctionLayer = BaseLayer< ActivationFunction, InputDataType, OutputDataType > |

| Standard Swish-Layer using the Swish activation function. More... | |

template < class ActivationFunction = TanhExpFunction , typename InputDataType = arma::mat , typename OutputDataType = arma::mat > | |

| using | TanhExpFunctionLayer = BaseLayer< ActivationFunction, InputDataType, OutputDataType > |

| Standard TanhExp-Layer using the TanhExp activation function. More... | |

template < class ActivationFunction = TanhFunction , typename InputDataType = arma::mat , typename OutputDataType = arma::mat > | |

| using | TanHLayer = BaseLayer< ActivationFunction, InputDataType, OutputDataType > |

| Standard hyperbolic tangent layer. More... | |

| using | XavierInitialization = GlorotInitializationType< true > |

| XavierInitialization is the popular name for this method. More... | |
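Both GlorotInitialization and XavierInitialization are instantiations of `GlorotInitializationType<bool>`. The sketch below mirrors that boolean switch. With `Uniform == true`, weights come from U(-a, a) with a = sqrt(6 / (fanIn + fanOut)); otherwise they come from N(0, sqrt(2 / (fanIn + fanOut))). The function `Glorot` and its mapping of true and false are this sketch's own choices, not mlpack's code.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical Glorot/Xavier initializer. Both branches give
// Var(w) = 2 / (fanIn + fanOut), since Var(U(-a, a)) = a^2 / 3.
template<bool Uniform>
std::vector<double> Glorot(std::size_t fanIn, std::size_t fanOut,
                           std::mt19937& rng)
{
  const double n = static_cast<double>(fanIn + fanOut);
  std::vector<double> w(fanIn * fanOut);
  if constexpr (Uniform)
  {
    const double a = std::sqrt(6.0 / n);
    std::uniform_real_distribution<double> dist(-a, a);
    for (double& v : w) v = dist(rng);
  }
  else
  {
    std::normal_distribution<double> dist(0.0, std::sqrt(2.0 / n));
    for (double& v : w) v = dist(rng);
  }
  return w;
}
```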

Functions | |

template < typename T > | |

| void | CheckInputShape (const T &network, const size_t inputShape, const std::string &functionName) |

| HAS_ANY_METHOD_FORM (Model, HasModelCheck) | |

| HAS_ANY_METHOD_FORM (InputShape, HasInputShapeCheck) | |

| HAS_MEM_FUNC (Gradient, HasGradientCheck) | |

| HAS_MEM_FUNC (Deterministic, HasDeterministicCheck) | |

| HAS_MEM_FUNC (Parameters, HasParametersCheck) | |

| HAS_MEM_FUNC (Add, HasAddCheck) | |

| HAS_MEM_FUNC (Location, HasLocationCheck) | |

| HAS_MEM_FUNC (Reset, HasResetCheck) | |

| HAS_MEM_FUNC (ResetCell, HasResetCellCheck) | |

| HAS_MEM_FUNC (Reward, HasRewardCheck) | |

| HAS_MEM_FUNC (InputWidth, HasInputWidth) | |

| HAS_MEM_FUNC (InputHeight, HasInputHeight) | |

| HAS_MEM_FUNC (Rho, HasRho) | |

| HAS_MEM_FUNC (Loss, HasLoss) | |

| HAS_MEM_FUNC (Run, HasRunCheck) | |

| HAS_MEM_FUNC (Bias, HasBiasCheck) | |

| HAS_MEM_FUNC (MaxIterations, HasMaxIterations) | |

template < typename ModelType > | |

| double | InceptionScore (ModelType Model, arma::mat images, size_t splits=1) |

| Function that computes Inception Score for a set of images produced by a GAN. More... | |
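The Inception Score is IS = exp(mean_x KL(p(y|x) || p(y))), where p(y) is the average of the per-image class distributions. InceptionScore runs a model over the images. The sketch below instead assumes the class probabilities are already available and uses a single split. The function `InceptionScoreFromProbs` is hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical Inception Score from per-image class probabilities p(y|x)
// (one row per image, each row summing to 1), using a single split.
double InceptionScoreFromProbs(const std::vector<std::vector<double>>& probs)
{
  assert(!probs.empty());
  const std::size_t n = probs.size(), k = probs[0].size();

  // Marginal class distribution p(y).
  std::vector<double> marginal(k, 0.0);
  for (const auto& row : probs)
    for (std::size_t c = 0; c < k; ++c)
      marginal[c] += row[c] / n;

  // Average KL divergence between each conditional and the marginal.
  double klSum = 0.0;
  for (const auto& row : probs)
    for (std::size_t c = 0; c < k; ++c)
      if (row[c] > 0.0)  // 0 * log(0 / q) is taken as 0.
        klSum += row[c] * std::log(row[c] / marginal[c]);

  return std::exp(klSum / n);
}
```

Confident, diverse predictions raise the score (two images confidently assigned to two different classes give 2), while identical predictions give the minimum value of 1.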

Detailed Description

Artificial Neural Network.


Typedef Documentation

◆ CrossEntropyError

| using CrossEntropyError = BCELoss< InputDataType, OutputDataType> |

Adding alias of BCELoss.

Definition at line 110 of file binary_cross_entropy_loss.hpp.

◆ CustomLayer

| using CustomLayer = BaseLayer< ActivationFunction, InputDataType, OutputDataType> |

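Custom layer that wraps a user-supplied activation function in a BaseLayer; with the default LogisticFunction it is a standard sigmoid layer.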

Definition at line 31 of file custom_layer.hpp.

◆ ElishFunctionLayer

| using ElishFunctionLayer = BaseLayer< ActivationFunction, InputDataType, OutputDataType> |

Standard ELiSH-Layer using the ELiSH activation function.

Definition at line 273 of file base_layer.hpp.

◆ ElliotFunctionLayer

| using ElliotFunctionLayer = BaseLayer< ActivationFunction, InputDataType, OutputDataType> |

Standard Elliot-Layer using the Elliot activation function.

Definition at line 262 of file base_layer.hpp.

◆ Embedding

| using Embedding = Lookup< MatType, MatType> |

Definition at line 142 of file lookup.hpp.

◆ GaussianFunctionLayer

| using GaussianFunctionLayer = BaseLayer< ActivationFunction, InputDataType, OutputDataType> |

Standard Gaussian-Layer using the Gaussian activation function.

Definition at line 284 of file base_layer.hpp.

◆ GELUFunctionLayer

| using GELUFunctionLayer = BaseLayer< ActivationFunction, InputDataType, OutputDataType> |

Standard GELU-Layer using the GELU activation function.

Definition at line 251 of file base_layer.hpp.

◆ GlorotInitialization

| using GlorotInitialization = GlorotInitializationType<false> |

GlorotInitialization uses a normal (Gaussian) distribution.

Definition at line 200 of file glorot_init.hpp.

◆ HardSigmoidLayer

| using HardSigmoidLayer = BaseLayer< ActivationFunction, InputDataType, OutputDataType> |

Standard HardSigmoid-Layer using the HardSigmoid activation function.

Definition at line 207 of file base_layer.hpp.
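A hard sigmoid replaces the logistic curve with a clipped line. A common variant, assumed here, is f(x) = min(1, max(0, 0.2x + 0.5)). Other libraries use different slopes, so the constants are an assumption, and the name `HardSigmoid` is hypothetical.

```cpp
#include <cmath>

// Hard sigmoid (assumed constants): clip(0.2 * x + 0.5, 0, 1).
double HardSigmoid(double x)
{
  return std::fmin(1.0, std::fmax(0.0, 0.2 * x + 0.5));
}
```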

◆ HardSwishFunctionLayer

using HardSwishFunctionLayer = BaseLayer<ActivationFunction, InputDataType, OutputDataType>

Standard HardSwish-Layer using the HardSwish activation function.

Definition at line 295 of file base_layer.hpp.
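HardSwish is usually defined as x * ReLU6(x + 3) / 6, where ReLU6(z) = min(max(z, 0), 6). It is zero for x <= -3 and the identity for x >= 3. A scalar sketch, assuming that definition; the name `HardSwish` is hypothetical.

```cpp
#include <cmath>

// HardSwish (assumed definition): x * min(max(x + 3, 0), 6) / 6.
double HardSwish(double x)
{
  const double relu6 = std::fmin(std::fmax(x + 3.0, 0.0), 6.0);
  return x * relu6 / 6.0;
}
```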

◆ IdentityLayer

using IdentityLayer = BaseLayer<ActivationFunction, InputDataType, OutputDataType>

Standard Identity-Layer using the identity activation function.

Definition at line 163 of file base_layer.hpp.

◆ L1Regularizer

typedef LRegularizer<1> L1Regularizer

The L1 Regularizer.

Definition at line 62 of file lregularizer.hpp.

◆ L2Regularizer

typedef LRegularizer<2> L2Regularizer

The L2 Regularizer.

Definition at line 67 of file lregularizer.hpp.
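Both typedefs instantiate LRegularizer with a power p (1 or 2). Assuming the regularizer penalizes factor * sum_i |w_i|^p, the penalty and its (sub)gradient can be sketched as follows. `LPenalty`, `Evaluate`, and `Gradient` are hypothetical names; mlpack's LRegularizer interface is not reproduced here.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical L_p penalty: factor * sum_i |w_i|^Power.
template<int Power>
struct LPenalty
{
  double factor;

  double Evaluate(const std::vector<double>& w) const
  {
    double sum = 0.0;
    for (double wi : w)
      sum += std::pow(std::abs(wi), Power);
    return factor * sum;
  }

  // d/dw |w|^p = p * |w|^(p-1) * sign(w). For p = 1 this is the subgradient
  // factor * sign(w), taken as 0 at w = 0.
  std::vector<double> Gradient(const std::vector<double>& w) const
  {
    std::vector<double> g(w.size());
    for (std::size_t i = 0; i < w.size(); ++i)
    {
      const double sign = (w[i] > 0.0) - (w[i] < 0.0);
      g[i] = factor * Power * std::pow(std::abs(w[i]), Power - 1) * sign;
    }
    return g;
  }
};

using L1Penalty = LPenalty<1>;
using L2Penalty = LPenalty<2>;
```

The L1 gradient has constant magnitude and so pushes small weights to exactly zero, while the L2 gradient shrinks weights in proportion to their size.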

◆ LayerTypes

using LayerTypes = boost::variant<AdaptiveMaxPooling<arma::mat, arma::mat>*, AdaptiveMeanPooling<arma::mat, arma::mat>*, Add<arma::mat, arma::mat>*, AddMerge<arma::mat, arma::mat>*, AlphaDropout<arma::mat, arma::mat>*, AtrousConvolution<NaiveConvolution<ValidConvolution>, NaiveConvolution<FullConvolution>, NaiveConvolution<ValidConvolution>, arma::mat, arma::mat>*, BaseLayer<LogisticFunction, arma::mat, arma::mat>*, BaseLayer<IdentityFunction, arma::mat, arma::mat>*, BaseLayer<TanhFunction, arma::mat, arma::mat>*, BaseLayer<SoftplusFunction, arma::mat, arma::mat>*, BaseLayer<RectifierFunction, arma::mat, arma::mat>*, BatchNorm<arma::mat, arma::mat>*, BilinearInterpolation<arma::mat, arma::mat>*, CELU<arma::mat, arma::mat>*, Concat<arma::mat, arma::mat>*, Concatenate<arma::mat, arma::mat>*, ConcatPerformance<NegativeLogLikelihood<arma::mat, arma::mat>, arma::mat, arma::mat>*, Constant<arma::mat, arma::mat>*, Convolution<NaiveConvolution<ValidConvolution>, NaiveConvolution<FullConvolution>, NaiveConvolution<ValidConvolution>, arma::mat, arma::mat>*, CReLU<arma::mat, arma::mat>*, DropConnect<arma::mat, arma::mat>*, Dropout<arma::mat, arma::mat>*, ELU<arma::mat, arma::mat>*, FastLSTM<arma::mat, arma::mat>*, GRU<arma::mat, arma::mat>*, HardTanH<arma::mat, arma::mat>*, Join<arma::mat, arma::mat>*, LayerNorm<arma::mat, arma::mat>*, LeakyReLU<arma::mat, arma::mat>*, Linear<arma::mat, arma::mat, NoRegularizer>*, LinearNoBias<arma::mat, arma::mat, NoRegularizer>*, LogSoftMax<arma::mat, arma::mat>*, Lookup<arma::mat, arma::mat>*, LSTM<arma::mat, arma::mat>*, MaxPooling<arma::mat, arma::mat>*, MeanPooling<arma::mat, arma::mat>*, MiniBatchDiscrimination<arma::mat, arma::mat>*, MultiplyConstant<arma::mat, arma::mat>*, MultiplyMerge<arma::mat, arma::mat>*, NegativeLogLikelihood<arma::mat, arma::mat>*, NoisyLinear<arma::mat, arma::mat>*, Padding<arma::mat, arma::mat>*, PReLU<arma::mat, arma::mat>*, Sequential<arma::mat, arma::mat, false>*, Sequential<arma::mat, arma::mat, true>*, Softmax<arma::mat, arma::mat>*, TransposedConvolution<NaiveConvolution<ValidConvolution>, NaiveConvolution<ValidConvolution>, NaiveConvolution<ValidConvolution>, arma::mat, arma::mat>*, WeightNorm<arma::mat, arma::mat>*, MoreTypes, CustomLayers*...>

Definition at line 317 of file layer_types.hpp.
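LayerTypes is a boost::variant over pointers to layer objects, so a network can be stored as a container of such variants. The visitor classes (AddVisitor, BackwardVisitor, and so on) then presumably act on whichever alternative a variant currently holds. The sketch below shows the same pattern with std::variant and std::visit from C++17, used here as a stand-in for boost::variant and boost::apply_visitor. The toy types `Identity` and `Scale` and the function `ForwardAll` are hypothetical, not mlpack layers.

```cpp
#include <variant>
#include <vector>

// Two toy layer types standing in for real layer classes.
struct Identity
{
  double Forward(double x) const { return x; }
};

struct Scale
{
  double factor;
  double Forward(double x) const { return factor * x; }
};

// A variant over pointers to layer types, mirroring the shape of LayerTypes.
using ToyLayerTypes = std::variant<Identity*, Scale*>;

// Pass x through every layer; std::visit calls the Forward() of whichever
// pointer type the variant holds.
double ForwardAll(const std::vector<ToyLayerTypes>& network, double x)
{
  for (const ToyLayerTypes& layer : network)
    x = std::visit([x](auto* l) { return l->Forward(x); }, layer);
  return x;
}
```

A generic lambda plays the role of a visitor class. Every alternative must provide the member being called, or the code fails to compile.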

◆ LiSHTFunctionLayer

using LiSHTFunctionLayer = BaseLayer<ActivationFunction, InputDataType, OutputDataType>

Standard LiSHT-Layer using the LiSHT activation function.

Definition at line 240 of file base_layer.hpp.
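LiSHT (linearly scaled hyperbolic tangent) is commonly defined as f(x) = x * tanh(x). The result is non-negative and symmetric in x. A scalar sketch, assuming that definition; the name `Lisht` is hypothetical.

```cpp
#include <cmath>

// LiSHT (assumed definition): x * tanh(x).
double Lisht(double x)
{
  return x * std::tanh(x);
}
```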

◆ MishFunctionLayer

using MishFunctionLayer = BaseLayer<ActivationFunction, InputDataType, OutputDataType>

Standard Mish-Layer using the Mish activation function.

Definition at line 229 of file base_layer.hpp.
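Mish is commonly defined as f(x) = x * tanh(softplus(x)), with softplus(x) = log(1 + exp(x)). A scalar sketch, assuming that definition. `std::log1p` keeps the softplus accurate when exp(x) is small, and the name `Mish` is hypothetical.

```cpp
#include <cmath>

// Mish (assumed definition): x * tanh(log(1 + exp(x))).
double Mish(double x)
{
  return x * std::tanh(std::log1p(std::exp(x)));
}
```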

◆ MoreTypes

using MoreTypes = boost::variant<FlexibleReLU<arma::mat, arma::mat>*, Linear3D<arma::mat, arma::mat, NoRegularizer>*, LpPooling<arma::mat, arma::mat>*, PixelShuffle<arma::mat, arma::mat>*, ChannelShuffle<arma::mat, arma::mat>*, Glimpse<arma::mat, arma::mat>*, Highway<arma::mat, arma::mat>*, MultiheadAttention<arma::mat, arma::mat, NoRegularizer>*, Recurrent<arma::mat, arma::mat>*, RecurrentAttention<arma::mat, arma::mat>*, ReinforceNormal<arma::mat, arma::mat>*, ReLU6<arma::mat, arma::mat>*, Reparametrization<arma::mat, arma::mat>*, Select<arma::mat, arma::mat>*, SpatialDropout<arma::mat, arma::mat>*, Subview<arma::mat, arma::mat>*, VRClassReward<arma::mat, arma::mat>*, VirtualBatchNorm<arma::mat, arma::mat>*, RBF<arma::mat, arma::mat, GaussianFunction>*, BaseLayer<GaussianFunction, arma::mat, arma::mat>*, PositionalEncoding<arma::mat, arma::mat>*, ISRLU<arma::mat, arma::mat>*, BicubicInterpolation<arma::mat, arma::mat>*, NearestInterpolation<arma::mat, arma::mat>*, GroupNorm<arma::mat, arma::mat>*>

Definition at line 255 of file layer_types.hpp.

◆ ReLULayer

Standard rectified linear unit non-linearity layer.

Definition at line 174 of file base_layer.hpp.

◆ Residual

using Residual = Sequential<InputDataType, OutputDataType, true, CustomLayers...>

Definition at line 259 of file sequential.hpp.
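Residual is Sequential with its third template argument set to true. Assume that flag enables a skip connection, so the block returns its input plus the output of its wrapped layers. The forward pass then reduces to y = x + f(x), which requires f to preserve the input size. A minimal sketch on std::vector, where the helper `ResidualForward` is hypothetical and `block` stands in for the wrapped layers:

```cpp
#include <cstddef>
#include <functional>
#include <stdexcept>
#include <vector>

using Vec = std::vector<double>;

// y = x + f(x); the wrapped block f must return a vector of the same size.
Vec ResidualForward(const std::function<Vec(const Vec&)>& block, const Vec& x)
{
  Vec y = block(x);
  if (y.size() != x.size())
    throw std::invalid_argument("residual block must preserve input size");
  for (std::size_t i = 0; i < y.size(); ++i)
    y[i] += x[i];
  return y;
}
```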

◆ SELU

◆ SigmoidLayer

using SigmoidLayer = BaseLayer<ActivationFunction, InputDataType, OutputDataType>

Standard Sigmoid-Layer using the logistic activation function.

Definition at line 152 of file base_layer.hpp.

◆ SILUFunctionLayer

using SILUFunctionLayer = BaseLayer<ActivationFunction, InputDataType, OutputDataType>

Standard SILU-Layer using the SILU activation function.

Definition at line 318 of file base_layer.hpp.

◆ SoftPlusLayer

using SoftPlusLayer = BaseLayer<ActivationFunction, InputDataType, OutputDataType>

Standard Softplus-Layer using the Softplus activation function.

Definition at line 196 of file base_layer.hpp.
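Softplus is f(x) = log(1 + exp(x)), a smooth approximation of the rectifier. Evaluating it literally overflows for large x. The sketch below uses the equivalent form max(x, 0) + log1p(exp(-|x|)), which stays finite for any input. The name `Softplus` is hypothetical, and whether mlpack uses this rearrangement is not stated here.

```cpp
#include <cmath>

// Numerically stable softplus: log(1 + exp(x)) = max(x, 0) + log1p(exp(-|x|)).
double Softplus(double x)
{
  return std::fmax(x, 0.0) + std::log1p(std::exp(-std::abs(x)));
}
```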

◆ SwishFunctionLayer

using SwishFunctionLayer = BaseLayer<ActivationFunction, InputDataType, OutputDataType>

Standard Swish-Layer using the Swish activation function.

Definition at line 218 of file base_layer.hpp.
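Swish is usually written f(x) = x * sigmoid(beta * x). With beta = 1 it coincides with SiLU. The sketch exposes beta as a defaulted parameter for illustration. Whether the Swish layer here fixes beta = 1 is an assumption, and the name `Swish` is hypothetical.

```cpp
#include <cmath>

// Swish: x * sigmoid(beta * x) = x / (1 + exp(-beta * x)).
double Swish(double x, double beta = 1.0)
{
  return x / (1.0 + std::exp(-beta * x));
}
```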

◆ TanhExpFunctionLayer

using TanhExpFunctionLayer = BaseLayer<ActivationFunction, InputDataType, OutputDataType>

Standard TanhExp-Layer using the TanhExp activation function.

Definition at line 306 of file base_layer.hpp.
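TanhExp is commonly defined as f(x) = x * tanh(exp(x)). It approaches the identity for large positive x and 0 for large negative x. A scalar sketch, assuming that definition; the name `TanhExp` is hypothetical.

```cpp
#include <cmath>

// TanhExp (assumed definition): x * tanh(exp(x)).
double TanhExp(double x)
{
  return x * std::tanh(std::exp(x));
}
```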

◆ TanHLayer

Standard hyperbolic tangent layer.

Definition at line 185 of file base_layer.hpp.

◆ XavierInitialization

using XavierInitialization = GlorotInitializationType<true>

XavierInitialization is the popular name for this method.

Definition at line 195 of file glorot_init.hpp.

Function Documentation

◆ CheckInputShape()

void mlpack::ann::CheckInputShape(const T& network, const size_t inputShape, const std::string& functionName)

Definition at line 25 of file check_input_shape.hpp.

◆ HAS_ANY_METHOD_FORM() [1/2]

mlpack::ann::HAS_ANY_METHOD_FORM(Model, HasModelCheck)

◆ HAS_ANY_METHOD_FORM() [2/2]

mlpack::ann::HAS_ANY_METHOD_FORM(InputShape, HasInputShapeCheck)

◆ HAS_MEM_FUNC() [1/15]

mlpack::ann::HAS_MEM_FUNC(Gradient, HasGradientCheck)

◆ HAS_MEM_FUNC() [2/15]

mlpack::ann::HAS_MEM_FUNC(Deterministic, HasDeterministicCheck)

◆ HAS_MEM_FUNC() [3/15]

mlpack::ann::HAS_MEM_FUNC(Parameters, HasParametersCheck)

◆ HAS_MEM_FUNC() [4/15]

mlpack::ann::HAS_MEM_FUNC(Add, HasAddCheck)

◆ HAS_MEM_FUNC() [5/15]

mlpack::ann::HAS_MEM_FUNC(Location, HasLocationCheck)

◆ HAS_MEM_FUNC() [6/15]

mlpack::ann::HAS_MEM_FUNC(Reset, HasResetCheck)

◆ HAS_MEM_FUNC() [7/15]

mlpack::ann::HAS_MEM_FUNC(ResetCell, HasResetCellCheck)

◆ HAS_MEM_FUNC() [8/15]

mlpack::ann::HAS_MEM_FUNC(Reward, HasRewardCheck)

◆ HAS_MEM_FUNC() [9/15]

mlpack::ann::HAS_MEM_FUNC(InputWidth, HasInputWidth)

◆ HAS_MEM_FUNC() [10/15]

mlpack::ann::HAS_MEM_FUNC(InputHeight, HasInputHeight)

◆ HAS_MEM_FUNC() [11/15]

mlpack::ann::HAS_MEM_FUNC(Rho, HasRho)

◆ HAS_MEM_FUNC() [12/15]

mlpack::ann::HAS_MEM_FUNC(Loss, HasLoss)

◆ HAS_MEM_FUNC() [13/15]

mlpack::ann::HAS_MEM_FUNC(Run, HasRunCheck)

◆ HAS_MEM_FUNC() [14/15]

mlpack::ann::HAS_MEM_FUNC(Bias, HasBiasCheck)

◆ HAS_MEM_FUNC() [15/15]

mlpack::ann::HAS_MEM_FUNC(MaxIterations, HasMaxIterations)
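HAS_MEM_FUNC and HAS_ANY_METHOD_FORM are undocumented here. Judging by their arguments, each use generates a compile-time trait (HasGradientCheck, HasResetCheck, and so on) that reports whether a type provides the named member. The sketch below shows that general member-detection idea with the C++17 std::void_t idiom. It is not the expansion of either macro, and `HasGradient`, `WithGradient`, and `WithoutGradient` are hypothetical names.

```cpp
#include <type_traits>
#include <utility>

// Primary template: assume no Gradient() member.
template<typename T, typename = void>
struct HasGradient : std::false_type {};

// Chosen only if `t.Gradient()` is a valid expression for an lvalue T.
template<typename T>
struct HasGradient<T, std::void_t<decltype(std::declval<T&>().Gradient())>>
    : std::true_type {};

struct WithGradient { int Gradient() { return 1; } };
struct WithoutGradient {};

static_assert(HasGradient<WithGradient>::value, "Gradient() should be found");
static_assert(!HasGradient<WithoutGradient>::value, "no Gradient() expected");
```

Traits like these let generic code, for example a visitor, call a member only on layer types that provide it, typically via `if constexpr` or overload selection.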

◆ InceptionScore()

double mlpack::ann::InceptionScore(ModelType Model, arma::mat images, size_t splits = 1)

Function that computes Inception Score for a set of images produced by a GAN.

For more information, see the following.

Parameters

- Model: Model for evaluating the quality of the images.
- images: Images generated by the GAN.
- splits: Number of splits to perform (default: 1).
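The Inception Score is commonly defined as IS = exp(E_x[KL(p(y|x) || p(y))]). Here p(y|x) is a pretrained classifier's class distribution for a generated image x, and p(y) is that distribution averaged over the images. With several splits, the score is typically computed per split and then averaged. The sketch below makes three assumptions: the classifier's probabilities are already computed (the function above instead takes the model and the images), images left over when the count is not divisible by `splits` are dropped, and the function is named `InceptionScoreSketch` (hypothetical).

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// probs[i][k] = p(y = k | x_i). Assumes splits >= 1 and probs.size() >= splits.
double InceptionScoreSketch(const std::vector<std::vector<double>>& probs,
                            std::size_t splits = 1)
{
  const std::size_t classes = probs.front().size();
  const std::size_t perSplit = probs.size() / splits;
  const double eps = 1e-16;  // Guards log(0).
  double total = 0.0;

  for (std::size_t s = 0; s < splits; ++s)
  {
    const std::size_t begin = s * perSplit;
    const std::size_t end = begin + perSplit;

    // Marginal class distribution p(y) over this split.
    std::vector<double> marginal(classes, 0.0);
    for (std::size_t i = begin; i < end; ++i)
      for (std::size_t k = 0; k < classes; ++k)
        marginal[k] += probs[i][k] / perSplit;

    // Sum of KL(p(y|x_i) || p(y)) over the split.
    double kl = 0.0;
    for (std::size_t i = begin; i < end; ++i)
      for (std::size_t k = 0; k < classes; ++k)
        kl += probs[i][k] *
            (std::log(probs[i][k] + eps) - std::log(marginal[k] + eps));

    total += std::exp(kl / perSplit);
  }
  return total / splits;
}
```

Confident and diverse predictions give a high score. Two images classified with certainty into two different classes score 2, and identical predictions score 1, the minimum.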