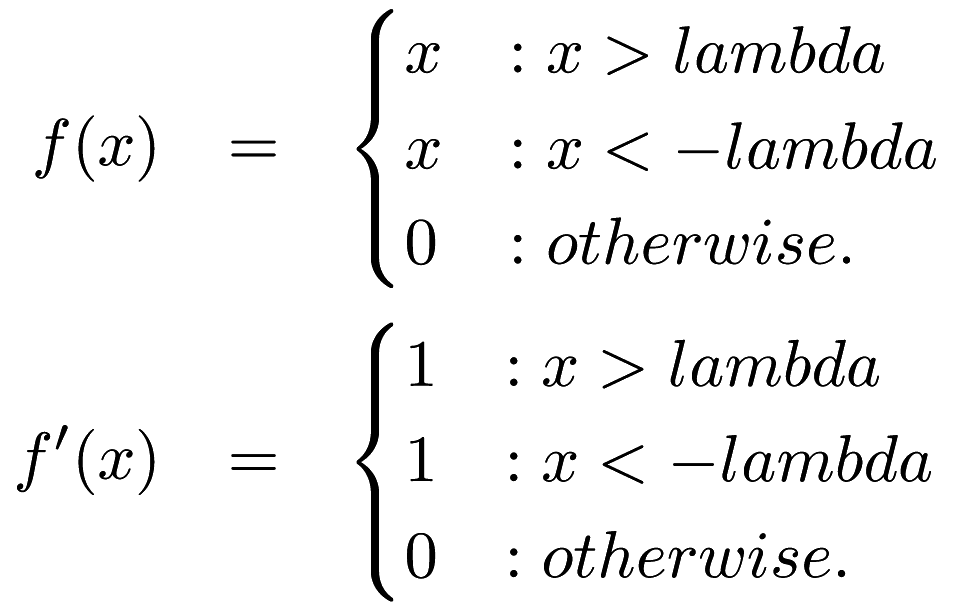

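The Hard Shrink operator sets every input whose magnitude does not exceed the threshold lambda to zero and passes all other inputs through unchanged.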

Public Member Functions

| Return type | Member | Description |
| --- | --- | --- |
| | HardShrink (const double lambda=0.5) | Create HardShrink object using specified hyperparameter lambda. |
| template<typename DataType> void | Backward (const DataType &input, DataType &gy, DataType &g) | Ordinary feed backward pass of a neural network, calculating the function f(x) by propagating x backwards through f. |
| OutputDataType const & | Delta () const | Get the delta. |
| OutputDataType & | Delta () | Modify the delta. |
| template<typename InputType, typename OutputType> void | Forward (const InputType &input, OutputType &output) | Ordinary feed forward pass of a neural network, evaluating the function f(x) by propagating the activity forward through f. |
| double const & | Lambda () const | Get the hyperparameter lambda. |
| double & | Lambda () | Modify the hyperparameter lambda. |
| OutputDataType const & | OutputParameter () const | Get the output parameter. |
| OutputDataType & | OutputParameter () | Modify the output parameter. |
| template<typename Archive> void | serialize (Archive &ar, const uint32_t) | Serialize the layer. |

Detailed Description

template<typename InputDataType = arma::mat, typename OutputDataType = arma::mat>

class mlpack::ann::HardShrink< InputDataType, OutputDataType >

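The Hard Shrink operator is defined as

$$
f(x) = \begin{cases} x & : x > \lambda \\ x & : x < -\lambda \\ 0 & : \text{otherwise} \end{cases}
\qquad
f'(x) = \begin{cases} 1 & : x > \lambda \\ 1 & : x < -\lambda \\ 0 & : \text{otherwise} \end{cases}
$$

$\lambda$ is set to 0.5 by default.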

Definition at line 45 of file hardshrink.hpp.
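As an illustration of the operator itself, the following is a minimal standalone sketch, not mlpack's implementation. It assumes the usual Hard Shrink rule: a value passes through when its magnitude exceeds lambda and becomes 0 otherwise, so the derivative is 1 or 0 accordingly. The names `HardShrinkValue` and `HardShrinkDerivative` are hypothetical, and the non-negativity check on lambda is an assumption of this sketch.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper (not part of mlpack): scalar Hard Shrink f(x).
// Returns x when |x| > lambda and 0 otherwise.
double HardShrinkValue(const double x, const double lambda = 0.5)
{
  assert(lambda >= 0.0);  // Assumption of this sketch: threshold is non-negative.
  return (std::abs(x) > lambda) ? x : 0.0;
}

// Hypothetical helper (not part of mlpack): derivative f'(x).
// Returns 1 when |x| > lambda and 0 otherwise.
double HardShrinkDerivative(const double x, const double lambda = 0.5)
{
  assert(lambda >= 0.0);
  return (std::abs(x) > lambda) ? 1.0 : 0.0;
}
```

With the default lambda of 0.5, an input of 0.7 is returned unchanged, while inputs of 0.2 and exactly 0.5 both map to 0 because the comparison is strict.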

Constructor & Destructor Documentation

◆ HardShrink()

HardShrink (const double lambda = 0.5)

Create HardShrink object using specified hyperparameter lambda.

Parameters

- lambda: The shrinkage threshold. It is calculated by multiplying the noise level sigma of the input (a noisy image) by a coefficient 'a', which is one of the training parameters. The default value of lambda is 0.5.
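The relationship between lambda, the noise level sigma, and the coefficient 'a' can be sketched as a one-line helper. The function name `ThresholdFromNoise` is hypothetical and not part of mlpack, and the check on sigma is an assumption of this sketch.

```cpp
#include <cassert>

// Hypothetical helper (not part of mlpack): lambda = a * sigma, where sigma is
// the noise level of the input and a is a trained coefficient.
double ThresholdFromNoise(const double sigma, const double a)
{
  assert(sigma >= 0.0);  // Assumption: a noise level is never negative.
  return a * sigma;
}
```

The resulting value would then be passed to the HardShrink constructor or assigned through Lambda().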

Member Function Documentation

◆ Backward()

void Backward (const DataType & input, DataType & gy, DataType & g)

Ordinary feed backward pass of a neural network, calculating the function f(x) by propagating x backwards through f, using the results from the feed forward pass.

Parameters

- input: The propagated input activation f(x).
- gy: The backpropagated error.
- g: The calculated gradient.
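The backward pass can be illustrated with a standalone sketch that uses `std::vector<double>` in place of the Armadillo types. This is not mlpack's code, and `HardShrinkBackward` is a hypothetical name. It assumes the gradient is the backpropagated error masked by the Hard Shrink derivative. Because a nonzero activation f(x) always has magnitude greater than lambda, testing the activation gives the same mask as testing the original x.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for Backward() (not part of mlpack).
// input: activations f(x) from the forward pass; gy: backpropagated error.
// Returns g, where g[i] = gy[i] if |input[i]| > lambda and 0 otherwise.
std::vector<double> HardShrinkBackward(const std::vector<double>& input,
                                       const std::vector<double>& gy,
                                       const double lambda = 0.5)
{
  assert(input.size() == gy.size());  // Both vectors must describe the same units.
  std::vector<double> g(input.size());
  for (std::size_t i = 0; i < input.size(); ++i)
    g[i] = (std::abs(input[i]) > lambda) ? gy[i] : 0.0;
  return g;
}
```

Entries that were zeroed in the forward pass therefore receive no gradient, and the remaining entries pass the error through unchanged.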

◆ Delta() [1/2]

OutputDataType const & Delta () const (inline)

Get the delta.

Definition at line 88 of file hardshrink.hpp.

◆ Delta() [2/2]

OutputDataType & Delta () (inline)

Modify the delta.

Definition at line 90 of file hardshrink.hpp.

◆ Forward()

void Forward (const InputType & input, OutputType & output)

Ordinary feed forward pass of a neural network, evaluating the function f(x) by propagating the activity forward through f.

Parameters

- input: Input data used for evaluating the Hard Shrink function.
- output: Resulting output activation.
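An element-wise forward pass can be sketched without Armadillo as follows. This is an illustrative stand-in rather than the library's implementation, and `HardShrinkForward` is a hypothetical name. It assumes each element is kept when its magnitude exceeds lambda and set to 0 otherwise.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical stand-in for Forward() (not part of mlpack).
// Applies Hard Shrink to every element of input and returns the activations.
std::vector<double> HardShrinkForward(const std::vector<double>& input,
                                      const double lambda = 0.5)
{
  assert(lambda >= 0.0);  // Assumption of this sketch: threshold is non-negative.
  std::vector<double> output(input.size());
  std::transform(input.begin(), input.end(), output.begin(),
      [lambda](const double x) { return (std::abs(x) > lambda) ? x : 0.0; });
  return output;
}
```

With the default lambda, the input {0.7, 0.3, -0.9, -0.5} yields {0.7, 0, -0.9, 0}: the value -0.5 lies exactly on the threshold and is zeroed by the strict comparison.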

◆ Lambda() [1/2]

double const & Lambda () const (inline)

Get the hyperparameter lambda.

Definition at line 93 of file hardshrink.hpp.

◆ Lambda() [2/2]

double & Lambda () (inline)

Modify the hyperparameter lambda.

Definition at line 95 of file hardshrink.hpp.

References HardShrink< InputDataType, OutputDataType >::serialize().

◆ OutputParameter() [1/2]

OutputDataType const & OutputParameter () const (inline)

Get the output parameter.

Definition at line 83 of file hardshrink.hpp.

◆ OutputParameter() [2/2]

OutputDataType & OutputParameter () (inline)

Modify the output parameter.

Definition at line 85 of file hardshrink.hpp.

◆ serialize()

void serialize (Archive & ar, const uint32_t)

Serialize the layer.

Referenced by HardShrink< InputDataType, OutputDataType >::Lambda().

The documentation for this class was generated from the following file:

- /home/ryan/src/mlpack.org/_src/mlpack-git/src/mlpack/methods/ann/layer/hardshrink.hpp