
Classes | |

| class | ELU< InputDataType, OutputDataType > |

| The ELU activation function. | |

Namespaces | |

| mlpack | |

Linear algebra utility functions, generally performed on matrices or vectors. | |

| mlpack::ann | |

Artificial Neural Network. | |

Typedefs | |

| using | SELU = ELU< arma::mat, arma::mat > |

Detailed Description

Definition of the ELU activation function as described by Djork-Arne Clevert, Thomas Unterthiner and Sepp Hochreiter in "Fast and Accurate Deep Network Learning by Exponential Linear Units (ELUs)".

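Definition of the SELU function as introduced by Klambauer et al. in "Self-Normalizing Neural Networks". The SELU activation function maps inputs with zero mean and unit variance to outputs with zero mean and unit variance, so the mean and variance of the activations are kept invariant from layer to layer.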
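The invariance claim can be checked numerically. The sketch below is plain C++ (it does not use mlpack or Armadillo) and assumes the SELU constants reported by Klambauer et al.; it integrates SELU(Z) against the standard normal density with composite Simpson's rule, so for Z ~ N(0, 1) it should report a mean of about 0 and a variance of about 1. All function names here are hypothetical.

```cpp
#include <cmath>

// SELU constants as reported by Klambauer et al. (rounded to double precision).
constexpr double kAlpha = 1.6732632423543772;
constexpr double kLambda = 1.0507009873554805;

// SELU(x) = lambda * x for x > 0, lambda * alpha * (e^x - 1) otherwise.
double Selu(double x)
{
  return x > 0.0 ? kLambda * x : kLambda * kAlpha * std::expm1(x);
}

// Standard normal probability density.
double NormalPdf(double z)
{
  const double pi = 3.14159265358979323846;
  return std::exp(-0.5 * z * z) / std::sqrt(2.0 * pi);
}

// Composite Simpson's rule for E[g(Z)], Z ~ N(0, 1), truncated to [-12, 12].
// n must be even; with n = 24000 the kink of SELU at z = 0 sits (up to
// rounding) on a panel boundary, which keeps the rule accurate.
template <typename G>
double ExpectUnderNormal(G g, int n = 24000)
{
  const double a = -12.0, b = 12.0, h = (b - a) / n;
  double sum = g(a) * NormalPdf(a) + g(b) * NormalPdf(b);
  for (int i = 1; i < n; ++i)
  {
    const double z = a + i * h;
    sum += (i % 2 == 1 ? 4.0 : 2.0) * g(z) * NormalPdf(z);
  }
  return sum * h / 3.0;
}

// Mean of SELU(Z) for Z ~ N(0, 1).
double SeluMean()
{
  return ExpectUnderNormal(Selu);
}

// Variance of SELU(Z) for Z ~ N(0, 1): E[SELU(Z)^2] - E[SELU(Z)]^2.
double SeluVariance()
{
  const double mean = SeluMean();
  return ExpectUnderNormal([](double z) { return Selu(z) * Selu(z); }) -
      mean * mean;
}
```

Quadrature is used instead of random sampling so the check is deterministic; replacing `kLambda` with 1 (plain ELU with the same alpha) keeps the mean at 0 but pulls the variance below 1, which is why the extra factor lambda is needed.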

In short, SELU = lambda * ELU, with 'alpha' and 'lambda' fixed to constants derived for inputs with zero mean and unit variance.
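Written out, the two functions and the derivative needed for a backward pass are given below. The numeric constants are the rounded values reported by Klambauer et al.; setting lambda = 1 recovers ELU with a free alpha.

```latex
\begin{aligned}
\mathrm{ELU}_{\alpha}(x) &=
  \begin{cases}
    x, & x > 0,\\
    \alpha\,(e^{x} - 1), & x \le 0,
  \end{cases}\\[4pt]
\mathrm{SELU}(x) &= \lambda\,\mathrm{ELU}_{\alpha}(x),
  \qquad \alpha \approx 1.6733,\ \lambda \approx 1.0507,\\[4pt]
\frac{d}{dx}\,\mathrm{SELU}(x) &=
  \begin{cases}
    \lambda, & x > 0,\\
    \lambda\alpha\,e^{x}, & x \le 0.
  \end{cases}
\end{aligned}
```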

Hence ELU and SELU are implemented by the same class in this file; the ELU function is the special case lambda = 1.
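The shared design can be illustrated with a small stand-alone class. This is a hypothetical sketch in plain C++, not mlpack's `ELU` class or its API: the class name `EluLike` and its factory functions are invented for illustration, and it works on `std::vector<double>` rather than Armadillo matrices. One type holds an (alpha, lambda) pair; the ELU factory fixes lambda to 1, and the SELU factory fixes both constants.

```cpp
#include <cmath>
#include <vector>

// Hypothetical sketch (not mlpack's ELU class): one type covers both ELU
// and SELU, which differ only in the (alpha, lambda) pair.
class EluLike
{
 public:
  // ELU with a user-chosen alpha; lambda is fixed to 1.
  static EluLike Elu(double alpha) { return EluLike(alpha, 1.0); }

  // SELU with the constants reported by Klambauer et al.
  static EluLike Selu()
  {
    return EluLike(1.6732632423543772, 1.0507009873554805);
  }

  // f(x) = lambda * x for x > 0, lambda * alpha * (e^x - 1) otherwise.
  double Fn(double x) const
  {
    return x > 0.0 ? lambda_ * x : lambda_ * alpha_ * std::expm1(x);
  }

  // f'(x) = lambda for x > 0, lambda * alpha * e^x otherwise.
  double Deriv(double x) const
  {
    return x > 0.0 ? lambda_ : lambda_ * alpha_ * std::exp(x);
  }

  // Element-wise forward pass over a vector of inputs.
  std::vector<double> Forward(const std::vector<double>& input) const
  {
    std::vector<double> output;
    output.reserve(input.size());
    for (double x : input)
      output.push_back(Fn(x));
    return output;
  }

  double Alpha() const { return alpha_; }
  double Lambda() const { return lambda_; }

 private:
  EluLike(double alpha, double lambda) : alpha_(alpha), lambda_(lambda) {}

  double alpha_;
  double lambda_;
};
```

For example, `EluLike::Selu().Fn(x)` equals `Lambda()` times `EluLike::Elu(EluLike::Selu().Alpha()).Fn(x)` for every `x`, which is the relation SELU = lambda * ELU stated above. Keeping a single type means the forward and derivative code is written once, and only the constructor arguments distinguish the two activations.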

mlpack is free software; you may redistribute it and/or modify it under the terms of the 3-clause BSD license. You should have received a copy of the 3-clause BSD license along with mlpack. If not, see http://www.opensource.org/licenses/BSD-3-Clause for more information.

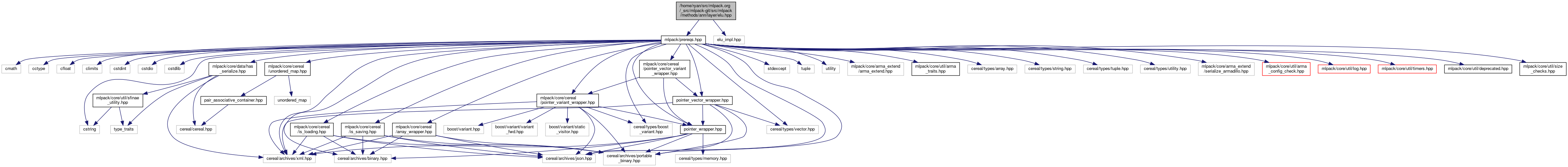

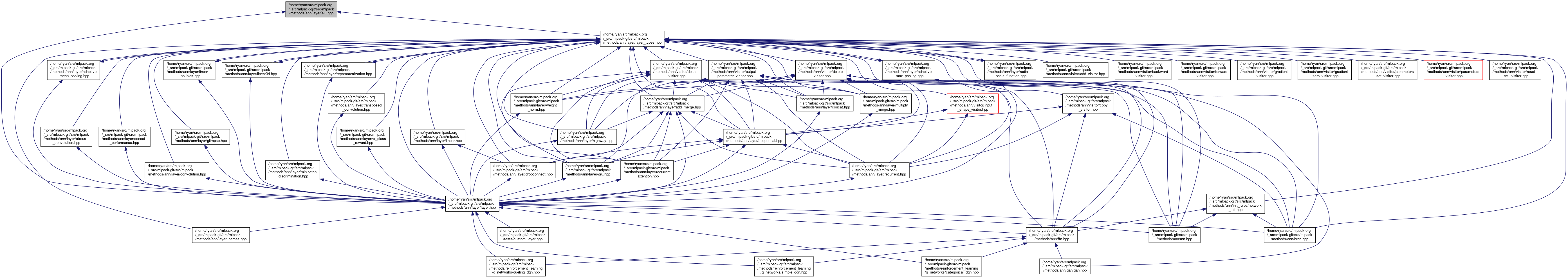

Definition in file elu.hpp.