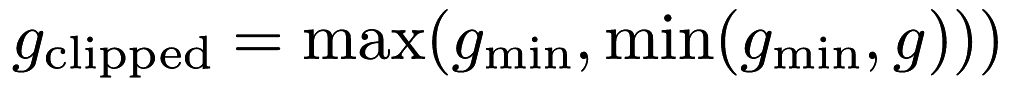

Interface for clipping the reward to some value between the specified minimum and maximum values.

Public Types

| Type alias | Description |
|---|---|
| using Action = typename EnvironmentType::Action | Convenient typedef for action. |
| using State = typename EnvironmentType::State | Convenient typedef for state. |

Public Member Functions

| Return type | Member | Description |
|---|---|---|
| | RewardClipping (EnvironmentType &environment, const double minReward=-1.0, const double maxReward=1.0) | Constructor for creating a RewardClipping instance. |
| EnvironmentType & | Environment () const | Get the environment. |
| EnvironmentType & | Environment () | Modify the environment. |
| State | InitialSample () | The InitialSample method is called by the environment to initialize the starting state. |
| bool | IsTerminal (const State &state) const | Checks whether the given state is a terminal state. |
| double | MaxReward () const | Get the maximum reward value. |
| double & | MaxReward () | Modify the maximum reward value. |
| double | MinReward () const | Get the minimum reward value. |
| double & | MinReward () | Modify the minimum reward value. |
| double | Sample (const State &state, const Action &action, State &nextState) | Dynamics of Environment. |
| double | Sample (const State &state, const Action &action) | Dynamics of Environment. |

Detailed Description

template<typename EnvironmentType>

class mlpack::rl::RewardClipping< EnvironmentType >

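Interface for clipping the reward to some value between the specified minimum and maximum values. Clipping here is implemented as $r_{\text{clipped}} = \max(r_{\min}, \min(r_{\max}, r))$, where $r$ is the reward returned by the wrapped environment and $r_{\min}$, $r_{\max}$ correspond to minReward and maxReward.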

- Template Parameters
  - EnvironmentType: A type of Environment that is being wrapped.

Definition at line 30 of file reward_clipping.hpp.
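As a rough illustration of the clipping rule described above, the following standalone sketch clamps a reward into a closed range. The helper name `ClipReward` is hypothetical and is not part of mlpack; the class itself references mlpack::math::ClampRange() for this step.

```cpp
#include <algorithm>

// Hypothetical helper, not part of mlpack: clamp a reward into the closed
// range [minReward, maxReward]. Assumes minReward <= maxReward.
inline double ClipReward(const double reward,
                         const double minReward,
                         const double maxReward)
{
  // The inner min caps the reward at maxReward; the outer max lifts it to
  // at least minReward.
  return std::max(minReward, std::min(maxReward, reward));
}
```

With the default bounds of -1.0 and 1.0, a reward of 5.0 would be clipped to 1.0, a reward of -3.0 to -1.0, and a reward of 0.25 would pass through unchanged.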

Member Typedef Documentation

◆ Action

| using Action = typename EnvironmentType::Action |

Convenient typedef for action.

Definition at line 37 of file reward_clipping.hpp.

◆ State

| using State = typename EnvironmentType::State |

Convenient typedef for state.

Definition at line 34 of file reward_clipping.hpp.

Constructor & Destructor Documentation

◆ RewardClipping()

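| RewardClipping (EnvironmentType &environment, const double minReward=-1.0, const double maxReward=1.0) | inline |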

Constructor for creating a RewardClipping instance.

- Parameters
  - minReward: Minimum possible value of clipped reward.
  - maxReward: Maximum possible value of clipped reward.
  - environment: An instance of the environment used for actual simulations.

Definition at line 47 of file reward_clipping.hpp.
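To make the wrapping pattern concrete, here is a minimal sketch that does not depend on mlpack. Both `ToyEnv` and `ClippedEnv` are hypothetical stand-ins written for this example: `ToyEnv` plays the role of an EnvironmentType, and `ClippedEnv` mirrors the constructor signature and forwarding behaviour documented on this page without claiming to reproduce the library's actual implementation.

```cpp
#include <algorithm>

// Hypothetical toy environment; not part of mlpack.
struct ToyEnv
{
  struct State { int position = 0; };
  struct Action { int step = 0; };

  State InitialSample() { return State(); }

  bool IsTerminal(const State& state) const { return state.position >= 10; }

  // The reward is deliberately large so that clipping has a visible effect.
  double Sample(const State& state, const Action& action, State& nextState)
  {
    nextState.position = state.position + action.step;
    return 5.0 * action.step;
  }
};

// Simplified stand-in for a reward-clipping wrapper (an assumption for
// illustration, not the mlpack source).
template<typename EnvironmentType>
class ClippedEnv
{
 public:
  using State = typename EnvironmentType::State;
  using Action = typename EnvironmentType::Action;

  // The environment is held by reference, so it must outlive the wrapper.
  ClippedEnv(EnvironmentType& environment,
             const double minReward = -1.0,
             const double maxReward = 1.0) :
      environment(environment),
      minReward(minReward),
      maxReward(maxReward)
  { }

  // Forward everything except the reward to the wrapped environment.
  State InitialSample() { return environment.InitialSample(); }

  bool IsTerminal(const State& state) const
  {
    return environment.IsTerminal(state);
  }

  double Sample(const State& state, const Action& action, State& nextState)
  {
    const double reward = environment.Sample(state, action, nextState);
    return std::max(minReward, std::min(maxReward, reward));
  }

 private:
  EnvironmentType& environment;
  double minReward;
  double maxReward;
};
```

In this sketch, `ClippedEnv<ToyEnv> wrapped(env);` uses the default bounds, so a step of 2 (raw reward 10.0) yields a clipped reward of 1.0 while the next state is still updated by the underlying environment.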

Member Function Documentation

◆ Environment() [1/2]

| EnvironmentType & Environment () const | inline |

Get the environment.

Definition at line 113 of file reward_clipping.hpp.

◆ Environment() [2/2]

| EnvironmentType & Environment () | inline |

Modify the environment.

Definition at line 115 of file reward_clipping.hpp.

◆ InitialSample()

| State InitialSample () | inline |

The InitialSample method is called by the environment to initialize the starting state.

Returns the initial sample produced by the wrapped environment.

Definition at line 62 of file reward_clipping.hpp.

◆ IsTerminal()

| bool IsTerminal (const State &state) const | inline |

Checks whether the given state is a terminal state.

The result is obtained by calling the corresponding method of the wrapped environment.

- Parameters
  - state: The state to check.
- Returns
  - true if state is a terminal state, otherwise false.

Definition at line 74 of file reward_clipping.hpp.

◆ MaxReward() [1/2]

| double MaxReward () const | inline |

Get the maximum reward value.

Definition at line 123 of file reward_clipping.hpp.

◆ MaxReward() [2/2]

| double & MaxReward () | inline |

Modify the maximum reward value.

Definition at line 125 of file reward_clipping.hpp.

◆ MinReward() [1/2]

| double MinReward () const | inline |

Get the minimum reward value.

Definition at line 118 of file reward_clipping.hpp.

◆ MinReward() [2/2]

| double & MinReward () | inline |

Modify the minimum reward value.

Definition at line 120 of file reward_clipping.hpp.
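The paired "get" and "modify" overloads documented above follow a common accessor pattern: the const overload returns a copy, and the non-const overload returns a reference that can be assigned through. The class `RewardBounds` below is a hypothetical stand-in used only to show the pattern in isolation.

```cpp
// Hypothetical holder for the two bounds; not part of mlpack.
class RewardBounds
{
 public:
  RewardBounds(const double minReward = -1.0, const double maxReward = 1.0) :
      minReward(minReward), maxReward(maxReward)
  { }

  // Get the minimum reward value (read-only copy).
  double MinReward() const { return minReward; }
  // Modify the minimum reward value (assignable reference).
  double& MinReward() { return minReward; }

  // Get the maximum reward value (read-only copy).
  double MaxReward() const { return maxReward; }
  // Modify the maximum reward value (assignable reference).
  double& MaxReward() { return maxReward; }

 private:
  double minReward;
  double maxReward;
};
```

Under this pattern, `bounds.MaxReward() = 10.0;` changes the upper bound in place, while calling `MaxReward()` on a const object only reads it.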

◆ Sample() [1/2]

Dynamics of Environment.

The rewards returned from the base environment are clipped according to the specified minimum and maximum values.

- Parameters
  - state: The current state.
  - action: The current action.
  - nextState: The next state.
- Returns
  - clippedReward, the reward clipped to the range [minReward, maxReward].

Definition at line 88 of file reward_clipping.hpp.

References mlpack::math::ClampRange().

Referenced by RewardClipping< EnvironmentType >::Sample().

◆ Sample() [2/2]

Dynamics of Environment.

The rewards returned from the base environment are clipped according to the specified minimum and maximum values.

- Parameters
  - state: The current state.
  - action: The current action.
- Returns
  - clippedReward, the reward clipped to the range [minReward, maxReward].

Definition at line 106 of file reward_clipping.hpp.

References RewardClipping< EnvironmentType >::Sample().
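The cross-references above indicate that the two-argument Sample() overload calls the three-argument one. A minimal sketch of that delegation, assuming the next state is simply discarded, might look like the following. `CounterEnv` and `ClippedSampler` are hypothetical names introduced for this example and are not part of mlpack.

```cpp
#include <algorithm>

// Hypothetical environment used only for this sketch; not part of mlpack.
struct CounterEnv
{
  struct State { int count = 0; };
  struct Action { int delta = 0; };

  // The raw reward is the new counter value, which can exceed the bounds.
  double Sample(const State& state, const Action& action, State& nextState)
  {
    nextState.count = state.count + action.delta;
    return static_cast<double>(nextState.count);
  }
};

// Hypothetical wrapper showing only the two Sample() overloads.
template<typename EnvironmentType>
struct ClippedSampler
{
  using State = typename EnvironmentType::State;
  using Action = typename EnvironmentType::Action;

  EnvironmentType& environment;
  double minReward;
  double maxReward;

  // Three-argument overload: step the environment, then clip the reward.
  double Sample(const State& state, const Action& action, State& nextState)
  {
    const double reward = environment.Sample(state, action, nextState);
    return std::max(minReward, std::min(maxReward, reward));
  }

  // Two-argument overload: delegate to the overload above and discard the
  // next state (an assumption about how the delegation is done).
  double Sample(const State& state, const Action& action)
  {
    State nextState;
    return Sample(state, action, nextState);
  }
};
```

Both overloads return the same clipped reward for the same inputs; the two-argument form is a convenience for callers that do not need the successor state.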

The documentation for this class was generated from the following file:

- /home/ryan/src/mlpack.org/_src/mlpack-git/src/mlpack/methods/reinforcement_learning/environment/reward_clipping.hpp